Good Morning,

The first video in a series of videos around career, product, and growth is out 🔥.

Everything significant in our lives starts with a goal. So we should set it right. For that reason, I have chosen the topic “7 mistakes people make while setting goals/OKRs” 🎯

Here is the video —

Coming to the topic,

This is the first post on the topic of experimentation and user research. In this post, we will cover an introduction to A/B tests, key terms, types of A/B tests, and how to conduct them. In future posts, we will cover

Where and How Not to Do Experiments. Here we will cover AA tests, separating out assumptions and other things that help you do the A/B tests the right way.

How to Generate Ideas for Experimentation. It is important to get the right ideas for experimentation. One of the key methods here is user research, which we will detail in the next post.

Doing User Research the Right Way. Benefits and pitfalls of user research methods like usability tests, contextual interviews, focus group discussions, etc.

So where do we start for this post?

It’s the US election season, so let’s start there! 😁

In 2008, Barack Obama made history. He was the first African American to be elected US president. But he had a unique challenge when he was running for the nomination back in 2007: most people hadn’t heard of him. Obama's campaign had to win support and raise money from all these people.

Dan Siroker, who later co-founded Optimizely, was the Director of Analytics for the Obama 2008 campaign. Dan had been a product manager at Google before, and his role in the Obama campaign was to maximize voter registration, email sign-ups, and donations through the official website by leading a team of software engineers and analysts.

One of the key things that worked for the Obama Campaign was to experiment with images and words that appealed to the voters and donors. The experiment tested two parts of the first page visitors saw: the “Media” section at the top and the call-to-action “Button”.

They tried four buttons and six different media (three images and three videos).

Button variations

Media variations

The results were stunning. The winning media+CTA variation had a sign-up rate of 11.6%, compared to 8.26% for the original page. It resulted in an additional 2,880,000 email addresses, which translated into an additional $60 million in donations. The winner page was,

If you are old enough to remember Obama’s key message that election, it was “Yes, We Can Change”. Not far from the winning variation on the website? 😊

Join 3,000+ smart, curious folks who have subscribed to the growth catalyst newsletter so far by subscribing👇

What’s A/B Testing?

A/B testing is nothing fancy. It’s testing different variations of elements of a website or app like image, text, web page, sign-up forms, payment flows, etc.

The term A/B Testing denotes ‘testing whether we should go for option A or option B’. Options A and B are variations of the elements.

Once you understand this, it’s a no brainer that all software products should do A/B testing to ensure they build the best version of their websites/apps for the users.

So where do we start? By understanding a few fancy terms so that you don’t feel like a muggle when people discuss A/B testing in a room.

Key Terms of A/B Testing

There are 4 key concepts you need to understand when it comes to A/B testing.

Control and variation

The goal of the test

The null hypothesis

Sample size, and confidence level

Control and Variation

As the term suggests, control is the current version available to all users on the product.

Variation is what we want to change in the product and test whether it’s better than the control.

The Goal of the Test

The goal of the a/b test is what matters to you and the reason why you are doing the a/b test in the first place. For example, the goal of the test in Obama’s campaign was increasing sign-ups. The goal should always be quantifiable.

Null Hypothesis

You will often hear this daunting term from engineers or data scientists. Let me explain in simple words.

The null hypothesis assumes there is NO (null) difference between control and variation in achieving the goal. For the A/B test to be meaningful, the null hypothesis needs to be rejected, i.e. the data must show a difference between the versions that is too large to be explained by chance.

How do we show that one version is better than the other? Enter sample size and confidence level.

Sample Size, and Confidence Level

We have already discussed sample size in designing surveys for product-market fit.

The sample size is the size of the population you have surveyed. The higher the sample size, the higher the confidence that your results will hold true for the entire population.

The same applies to websites. The higher the number of users who have been through the A/B test, the more confident you can be that one variation is better than the other. Let’s take an example.

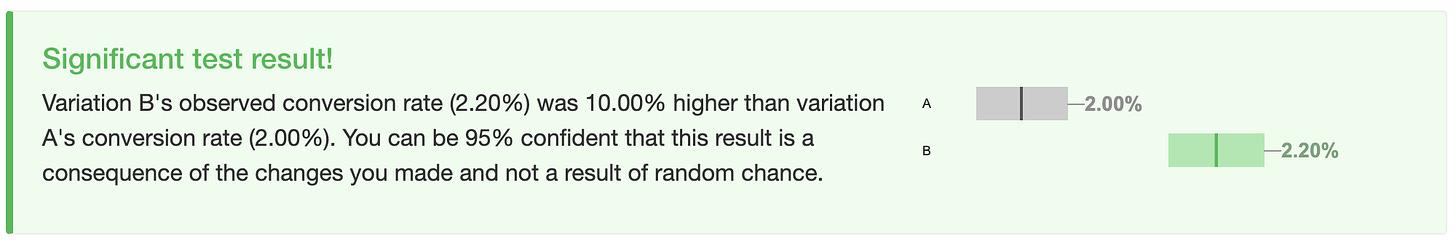

Suppose 5,000 people have been through your A/B test. The goal is to increase sign-ups. You have split the users 50-50 between control (A) and variation (B). The results you get are

A: 2,500 users, 50 sign-ups

B: 2,500 users, 55 sign-ups

Here you can see that B is converting at 2.2% (55/2500), whereas A is converting at 2%. So B looks better. But how confident are we that B is better than A?

You can use this calculator to find the confidence level — https://abtestguide.com/calc/

What if we increase the sample size to 500,000?

A: 250,000 users, 5,000 sign-ups

B: 250,000 users, 5,500 sign-ups

Variation B is still converting at 2.2% (5500/250000), whereas A is converting at 2%. What about the confidence level?
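If you are curious what a calculator like this does under the hood, here is a minimal sketch in Python. It assumes one common approach, a one-sided two-proportion z-test with a pooled standard error. The function name `confidence_b_beats_a` is made up for this post, and the calculator linked above may use a slightly different formula, so treat the numbers as approximate. It also assumes A had 5,000 sign-ups in the larger example, which is what a 2% conversion rate on 250,000 users implies.

```python
from math import sqrt
from statistics import NormalDist


def confidence_b_beats_a(users_a, signups_a, users_b, signups_b):
    """Confidence that B converts better than A.

    Assumption: a one-sided two-proportion z-test with a pooled
    standard error. Other tools may compute this differently.
    """
    rate_a = signups_a / users_a
    rate_b = signups_b / users_b
    # Pooled rate: the conversion rate if A and B were really the same
    # (this is the null hypothesis).
    pooled = (signups_a + signups_b) / (users_a + users_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Confidence = 1 minus the one-sided p-value.
    return NormalDist().cdf(z)


small = confidence_b_beats_a(2500, 50, 2500, 55)
large = confidence_b_beats_a(250_000, 5000, 250_000, 5500)
print(f"5,000 users:   {small:.1%}")
print(f"500,000 users: {large:.4%}")
```

With these inputs, the small test comes out at roughly 69% confidence, well short of 95%, while the large one lands above 99.99%. Same conversion rates, very different certainty.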

What does 95% confidence mean? Roughly, it means that if A and B truly performed the same, a difference this large would show up by chance only about 5 times in 100.

Usually, when we do A/B tests, we are looking for 95%+ confidence.

As you can see, the larger the traffic on the website, the quicker you can get the results.

How to Do A/B Tests

More often than not, your company will already be using one A/B testing tool or another. Larger companies build in-house solutions for A/B testing. Smaller ones pick one of the tools available in the market. Some of the popular ones are

All three are market leaders in this segment, and you can try any of them.

When Doing A/B Tests

Beginner’s Mind

A/B testing requires a beginner’s mind, i.e. openness to test out ideas that may be unconventional.

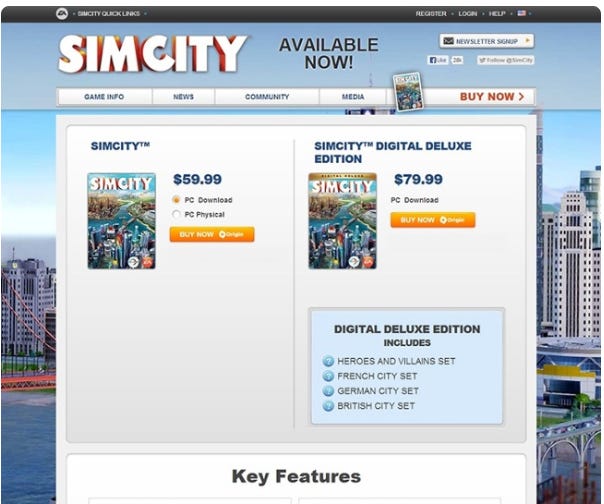

An example of this came when the popular game SimCity 5 was launched. Electronic Arts, the owner of the game, wanted to A/B test different versions of its sales page to identify how it could increase sales.

Most marketers know that advertising an incentive will result in increased sales. So they offered 20 percent off a future purchase for anyone who bought SimCity 5 on the pre-order page.

The variation didn’t have this incentive.

The variation performed more than 40 percent better than the control. Avid fans of SimCity 5 weren’t interested in an incentive. They just wanted to buy the game.

Testing against conventional wisdom requires a beginner’s mind ✌️

Start Small

The variation should always be made live to a small % of users.

If you are a small-to-midsize company, you can start with 10% of users and see how the variation performs relative to the control. If the performance is good, you can gradually increase the variation's share to 20%, 50%, and eventually 100%.

Larger companies like Facebook and Google have enough users that they can start at 1% and gradually increase it.

You can determine where to start by looking at the population size. If 1% of your users is a large enough group to give you high-confidence results within days or weeks, you should go for 1% first. If not, go for a higher %.
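Here is one way such a gradual rollout could work, sketched in Python under a few assumptions: every user has a stable ID, and we hash that ID into one of 100 buckets so the same user always sees the same version. The function names `bucket` and `assign` and the experiment key `new-signup-page` are hypothetical and not taken from any particular tool.

```python
import hashlib


def bucket(user_id: str, experiment: str) -> int:
    """Map a user to a stable bucket from 0 to 99 for one experiment."""
    key = f"{experiment}:{user_id}".encode()
    digest = hashlib.sha256(key).hexdigest()
    return int(digest, 16) % 100


def assign(user_id: str, experiment: str, rollout_percent: int) -> str:
    """Show the variation to users whose bucket falls below the rollout %."""
    if bucket(user_id, experiment) < rollout_percent:
        return "variation"
    return "control"


# Start at 10%, then raise to 20% once the results look good.
users = [f"user-{i}" for i in range(10_000)]
at_10 = {u for u in users if assign(u, "new-signup-page", 10) == "variation"}
at_20 = {u for u in users if assign(u, "new-signup-page", 20) == "variation"}
print(len(at_10), len(at_20))
```

Because the bucket depends only on the user ID and the experiment key, raising the rollout from 10% to 20% keeps everyone who already saw the variation in the variation, and only adds new users to it.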

What Can You A/B Test, aka Types?

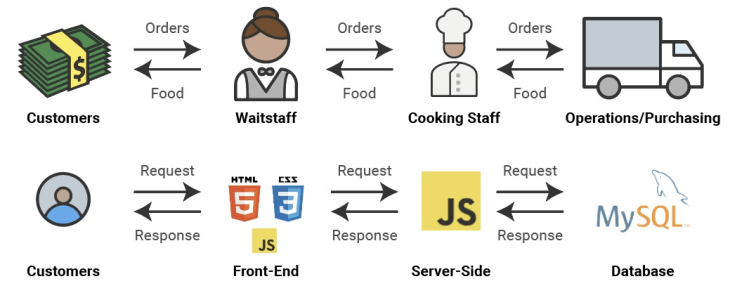

Before we get into the types, it’s important for non-technical readers to understand how websites and apps work.

To understand how websites work, think of a restaurant. At the restaurant, the waiter interacts with the customer. The waiter takes their request and places the order with the kitchen. The food requires raw ingredients kept in the storage area. The chef takes the raw ingredients, prepares the food, and passes it to the waiter.

In this analogy, the customer is the end-user. The waitstaff is the front-end, or client-side. The food is the website. The shelf or storage area of the restaurant is the database. The chef and the database sit at the back-end, or server-side.

Client-Side

Usually, A/B tests are done on the following elements on the client-side.

copy

design and layout

forms

navigation

Call to action (CTA)

These are all client-side tests and are supported by all popular tools. They are also pretty common and easy to do because they don’t require any change to your back-end/server-side logic.

From our earlier example of a restaurant, it is like ordering readymade biscuits. It is easy for the waiter to serve 2 varieties of biscuits (A/B) to customers to see which one they like better, and it doesn’t require a chef.

Server-side Testing for Algorithms

You can also conduct server-side A/B tests, where the server has to create the different variations before they are delivered to the client.

Suppose you are building a recommendation engine for an eCommerce website. You can A/B test whether the new recommendation algorithm performs better than the existing one by showing each to a different set of users.

You can do the same over the search results as well. These require deep experimentation capabilities and are useful for dynamic websites.

As these tests got popular, tools like Optimizely, which started with only client-side testing, have started providing full-stack testing.

From our earlier example of a restaurant, it is like ordering omelets. The chef can prepare different omelets (A/B) and serve them to different customers to see which one they like.

Split URL Testing

URL is the address of a website. Split URL Testing is testing multiple versions of your webpage hosted on different URLs. The main difference between a Split URL test and an A/B test is that in the case of a Split test, the variations are hosted on different URLs.

Split URL testing is preferred when significant design changes are necessary, and you don’t want to touch the existing website design.

It’s like building a variation of the restaurant at a different location and testing if customers prefer one versus the other. You don’t do it often as it requires significant investment.

MultiVariate Testing (MVT)

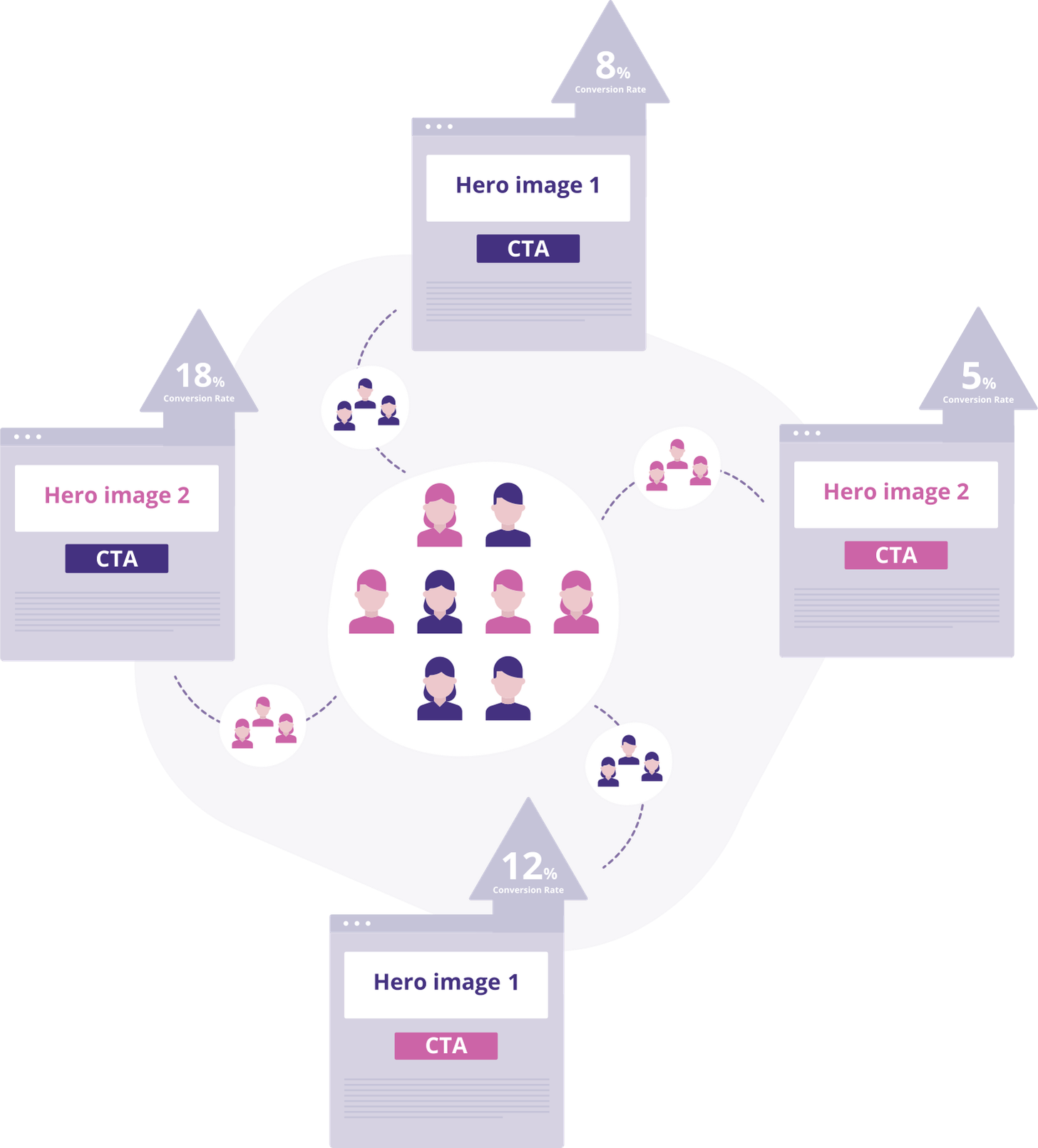

It is a method in which changes are made to multiple sections of a webpage, and variations are created for all the possible combinations of changes.

For instance, say you decide to test two versions each of the hero image and the CTA button color on a webpage, as in Obama’s campaign. With MVT, you create one variation for the hero image and one for the CTA button color. To test all the versions, you create combinations of all the variations, as shown here:

After running a multivariate test on all combinations, you can use the data to see which one performs best and deploy that. In the image below, the winner is Hero Image 2 + Blue CTA with an 18% conversion.
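The combinations in a multivariate test grow quickly, and a few lines of Python make that concrete. This is only an illustrative sketch: "Blue CTA" and the hero image labels come from the example above, while "Red CTA" is a placeholder I have assumed for the second button color.

```python
from itertools import product

hero_images = ["Hero Image 1", "Hero Image 2"]
cta_colors = ["Red CTA", "Blue CTA"]  # "Red CTA" is an assumed placeholder

# Every hero image paired with every CTA color: 2 x 2 = 4 pages to test.
combinations = [f"{hero} + {cta}" for hero, cta in product(hero_images, cta_colors)]
for combo in combinations:
    print(combo)

# The Obama campaign test had 4 buttons and 6 media options.
obama_pages = list(product(range(4), range(6)))
print(len(obama_pages), "combinations")
```

Two elements with two versions each give 4 pages, and the Obama campaign's 4 buttons and 6 media options give 24. Since every combination needs enough traffic to reach a confident result, MVT suits high-traffic pages best.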

Multipage Testing

Multipage Testing is a form of experimentation where you can test changes to particular elements across multiple pages.

Suppose you want to introduce social proof (3000+ paying customers) on your website. You add it on every page of the funnel in one variation and leave it out in the other. You now have a multipage test 😊


This would be all for this post. See you next week,

Sincerely,

Deepak
