Good Morning,

The world of AI is changing rapidly, and we have to keep updating our understanding.

About a year ago, I published the book Tech Simplified for PMs and Entrepreneurs and loved the response from the community. Explaining tech in simple language helps people without a coding background build the knowledge to do their jobs better.

So I thought of starting this Tech Simplified newsletter series, where I write about newer topics in tech. Most recently, AI fever has gripped the world, ChatGPT in particular, so there can’t be a better topic to start with. Before we start, an announcement!

The applications for the 2nd cohort of Product-Led Growth are now open! The first cohort had 60 members (limited seats), and all of them are existing PMs and Founders. It’s highly recommended for those who want to learn advanced skills in Product Growth with a super-smart peer group.

Why is this course different?

The 1st cohort’s sessions ended yesterday, and testimonials have just started flowing in. Here are a few testimonials from the 1st cohort to check out 🤓

Read on for more context.

First, most courses on growth focus on marketing. This one is a product-first course. PMs and Founders need product-led growth because spending money to grow isn’t always a viable option.

Second, courses usually focus on learning but not on creating immediate impact in your current job. The content, tools, and templates in this course are designed so that the majority of PMs and founders from the 1st cohort built their own product growth plan with my assistance. Building your own product growth plan is part of the curriculum, and that’s proof of value right there :)

Third, every session has 20-30 objective questions on frameworks and case studies that help you evaluate whether you really understood the concepts and can apply them.

Add to that a capstone project, case studies, interview prep for FAANG, and a community of super-smart folks 🔥

You can learn more about the course at https://www.pmcurve.com/

I will be shortlisting applications for this cohort every week, and seats are limited. The first shortlist goes out this evening, so apply today if you are considering it.

It would be hard to cover ChatGPT in a single post, which is why I have taken on creating a 60-80 page guide on it that I plan to publish by April. In this post, we will cover the key to ChatGPT’s power: Large Language Models (LLMs).

Models

To understand what a large language model is, we first have to understand what a model is.

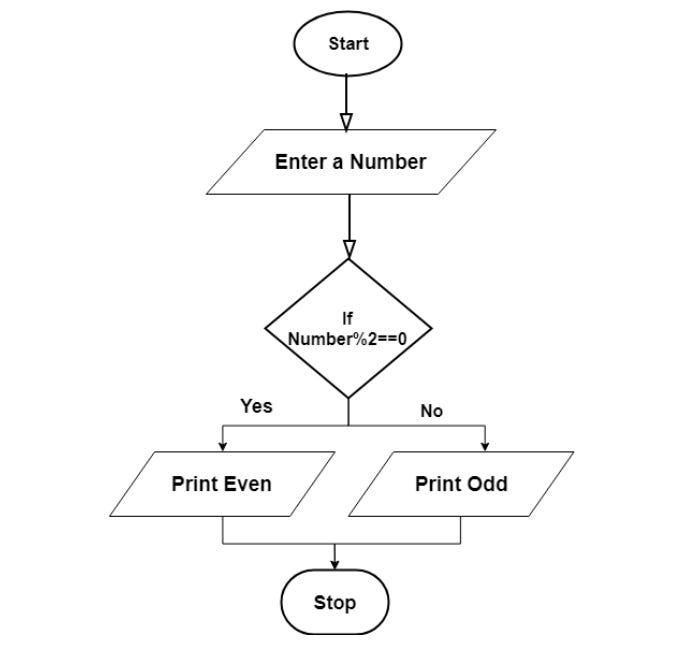

You may have heard of algorithms if you have studied the basics of computer science. An algorithm is a set of rules that a computer follows to solve a problem. For example, we can have a set of rules, i.e. an algorithm, to determine whether a given number n is even or odd. You can see that algorithm below.
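
Here is one way to write that even/odd rule in Python, as a minimal sketch (the function names `is_even` and `parity` are our own, for illustration). The rule says n is even exactly when dividing it by 2 leaves no remainder.

```python
def is_even(n: int) -> bool:
    """Rule: n is even if dividing it by 2 leaves remainder 0."""
    return n % 2 == 0


def parity(n: int) -> str:
    # Apply the rule and report the answer in words.
    return "even" if is_even(n) else "odd"


print(parity(10))  # even
print(parity(7))   # odd
```

Note that the rule is fixed in advance; nothing about it is learned from data. That is the key difference from the models discussed next.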

Understanding algorithms is a prerequisite to understanding models, because many people confuse the two. Let’s take a machine learning algorithm as an example.

In machine learning, every enthusiast starts with the linear regression algorithm. It defines the relationship between one independent variable (x) and one dependent variable (y) using a linear equation (i.e. a straight line). The equation for a line is y = mx + c, which can also be written as y = w2 + w1x (with w1 = m and w2 = c). The mathematical rule that we just defined is an algorithm. Let’s talk about models now.
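
To make the distinction concrete, here is that rule as a Python function, a minimal sketch with a made-up name, `line`. It fixes the form y = w2 + w1x but leaves w1 and w2 open: they are inputs to the function, not yet known values.

```python
def line(x: float, w1: float, w2: float) -> float:
    """The linear-regression rule: y = w2 + w1 * x.

    The rule fixes the shape (a straight line); w1 (slope) and
    w2 (intercept) stay open until we look at data.
    """
    return w2 + w1 * x


# Different choices of w1 and w2 give different lines from the same rule.
print(line(2, w1=1, w2=0))  # 2
print(line(2, w1=3, w2=1))  # 7
```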

A model is what you get when you determine w1 and w2 in the algorithm from the data you have. Suppose we were trying to find the relationship between the number of hours spent studying and the marks obtained on a test, and say we had these data points:

0 hours — 0 marks

3 hours — 33 marks

9 hours — 99 marks

We can put the first data point (0 hours, 0 marks) into the equation and see that w2 = 0:

0 = w2 + w1*0

w2 = 0

Let’s put in another data point (3, 33):

33 = 0 + w1*3

w1 = 33/3 = 11

Now, let’s check whether y = 11x holds true for the third data point (9, 99):

y = 11*9 = 99

So now we have a model, y = 11x, which we can use to make predictions. For example, how many marks would a person who studies 5 hours get?

Using y = 11x, we can predict that a person studying 5 hours would get 11*5 = 55 marks.
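
The substitution steps above can be sketched in Python. This is a minimal sketch that assumes, as in the example, that the data sit exactly on a straight line: it solves for w1 and w2 from the first two points, checks the third, then predicts. (In practice, linear regression usually uses least squares instead, which also copes with noisy data that don’t sit on one line.)

```python
def fit_from_two_points(p1, p2):
    """Solve y = w2 + w1*x exactly from two (x, y) points."""
    (x1, y1), (x2, y2) = p1, p2
    w1 = (y2 - y1) / (x2 - x1)  # slope: extra marks per extra hour
    w2 = y1 - w1 * x1           # intercept: marks at 0 hours
    return w1, w2


data = [(0, 0), (3, 33), (9, 99)]  # (hours studied, marks) from the example

w1, w2 = fit_from_two_points(data[0], data[1])
print(w1, w2)  # 11.0 0.0

# Check that the fitted line also passes through the third point.
x3, y3 = data[2]
print(w2 + w1 * x3 == y3)  # True

# Use the model to predict marks for 5 hours of study.
print(w2 + w1 * 5)  # 55.0
```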

For another test that is tougher, the data might be different. Maybe you take 5 hours to secure only 20 marks there. The model for that test would also be different.

Now that we understand what a model is, here are a few definitions:

- w1 and w2, whose values are estimated from data, are called the parameters of the model

- Using data to determine the parameters of the model is called training the model

- Using new data that the model wasn’t trained on to measure how well it performs is called testing the model

So to create a model, we need an algorithm as the base and data to train the model. We also test the model before we make it live to ensure it performs well.
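
Continuing the same example, here is a minimal sketch of training versus testing. We treat the first two data points as training data and hold back the third as test data the model never sees during training. This split is our own choice for illustration; real projects hold out a much larger share of the data.

```python
train = [(0, 0), (3, 33)]  # data used to determine the parameters
test = [(9, 99)]           # unseen data used only to check the model

# Training: solve y = w2 + w1*x from the two training points.
(x1, y1), (x2, y2) = train
w1 = (y2 - y1) / (x2 - x1)
w2 = y1 - w1 * x1


def predict(x):
    return w2 + w1 * x


# Testing: average absolute error on data the model was not trained on.
errors = [abs(predict(x) - y) for x, y in test]
mae = sum(errors) / len(errors)
print(f"w1={w1}, w2={w2}, test error={mae}")  # w1=11.0, w2=0.0, test error=0.0
```

A test error of 0 means the model predicted the held-out point perfectly; with real data you would expect some error and decide whether it is small enough to go live.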

Another important point to note is that we can apply a different algorithm to the above data to create a different model. So, we can generate a new model from the same algorithm with different data, or a different model from the same data with a different algorithm.
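
A minimal sketch of both ideas. The tougher-test data is an assumption built from the text: we take its single point (5 hours, 20 marks) and assume 0 hours still gives 0 marks. The “different algorithm” is a deliberately crude stand-in we picked for illustration: always predict the average mark, ignoring hours.

```python
def fit_line(points):
    """Same algorithm as before: y = w2 + w1*x, solved from two points."""
    (x1, y1), (x2, y2) = points[:2]
    w1 = (y2 - y1) / (x2 - x1)
    return w1, y1 - w1 * x1


def fit_average(points):
    """A different (much cruder) algorithm: predict the mean of y for every x."""
    return sum(y for _, y in points) / len(points)


easy_test = [(0, 0), (3, 33), (9, 99)]
tough_test = [(0, 0), (5, 20)]  # assumption: (0, 0) is not given in the text

# Same algorithm, different data -> different model.
print(fit_line(easy_test))   # (11.0, 0.0), i.e. y = 11x
print(fit_line(tough_test))  # (4.0, 0.0), i.e. y = 4x

# Same data, different algorithm -> different model.
print(fit_average(easy_test))  # 44.0, i.e. y = 44 regardless of hours
```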

Now that you have understood models, let’s jump to language models.

Language Models

Have you noticed the ‘Smart Compose’ feature in Gmail that suggests ways to complete your sentences while you write an email? How does ‘Smart Compose’ work? In the simplest terms, it looks at how we normally write our emails and suggests what is likely to come next.

Smart Compose is one of many use cases of language models. A language model is a model trained for language-specific tasks like writing an email, translation, chat replies, etc. Language models are useful for a variety of language-related problems:

Speech recognition: the ability of a machine to identify words spoken aloud and convert them into readable text

Machine translation: translating content between languages without the involvement of human linguists

Natural language generation: generating text that appears to be written by a human, without a human actually writing it

Part-of-speech tagging: labelling each word in a text (corpus) with its part of speech, such as noun or verb

OCR: converting an image of text into machine-readable text

Handwriting recognition, and many more

Just as the model above is built on an algorithm, language models are also built on algorithms. Popular ones include n-grams and deep neural networks; ChatGPT is built on the latter. The data to train these models comes in the form of articles, books, emails, etc. written by humans in the past.
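
To see the n-gram idea in action, here is a minimal bigram (2-gram) sketch: it counts which word follows which in some training text, then suggests the most frequent follower, loosely like a Smart Compose-style suggestion. The tiny corpus is made up for illustration; real products train on vastly more text and typically use neural networks rather than raw counts.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def suggest_next(model, word):
    """Return the word seen most often after `word`, or None if never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text standing in for "emails written in the past".
corpus = [
    "thank you for your time",
    "thank you for the update",
    "thank you so much",
    "looking forward to your reply",
]

model = train_bigram(corpus)
print(suggest_next(model, "thank"))  # you
print(suggest_next(model, "you"))    # for
```

Here the counts play the role of parameters: they are estimated from data, just like w1 and w2.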

Now that we understand language models, let’s move on to what’s large in large language models.

Large Language Models

The goal of an LLM is to predict the next word based on the words that came before it. To do this, large language models use deep neural network algorithms and are trained on vast amounts of data (billions of words) to learn patterns in language.

We call LLMs ‘large’ not (just) because they are trained on vast quantities of data, but because of the large number of parameters these models have. Look at the number of parameters various LLMs have, and don’t get daunted by the model names :)

BERT-large has 340 million parameters, whereas GPT-3 has 175 billion parameters. OpenAI has not revealed the number of parameters of GPT-4, which launched just this week, but it is widely expected to be much higher than GPT-3’s. And because of this large number of parameters, we need vast quantities of data for training. You may ask: how vast are we talking?

GPT-3’s training data contained about 499 billion tokens. A token is similar to a word (we won’t go into why they are called tokens here). That translates to roughly 100 billion sentences, assuming 5 tokens per sentence.
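
The sentence estimate is simple division, sketched below. One simplification is flagged in the code: counting whitespace-separated words only approximates tokens, since GPT-style tokenizers split text into sub-word pieces, so real token counts are often higher than word counts.

```python
def approx_tokens(text: str) -> int:
    # Simplification: treat each whitespace-separated word as one token.
    return len(text.split())


TOTAL_TOKENS = 499_000_000_000  # GPT-3 training data size, from the text
TOKENS_PER_SENTENCE = 5         # the text's simplifying assumption

sentences = TOTAL_TOKENS / TOKENS_PER_SENTENCE
print(f"{sentences:,.0f} sentences")  # 99,800,000,000 sentences (~100 billion)

print(approx_tokens("GPT-3 was trained on billions of tokens"))  # 7
```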

Training LLMs with billions of parameters requires massive amounts of data and computational power. OpenAI, for example, started GPT-3’s training data from about 45 terabytes of compressed raw text (filtered down to about 570 GB before training) and trained the model on a large cluster of NVIDIA V100 GPUs running in parallel. The training process took several weeks to complete.

What about the algorithms? Deep neural networks deserve a post of their own, so I’ll leave it here by saying that these algorithms have billions of parameters like w1, w2, …
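
To get a feel for how parameters add up, here is a minimal sketch that counts them in a small fully connected neural network. Each layer has one weight (like w1) per input-output connection plus one bias (like w2) per output. The layer sizes are hypothetical, chosen for illustration, and are not those of any real LLM.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    A layer mapping n_in inputs to n_out outputs has n_in * n_out weights
    (each like w1 in y = w2 + w1*x) plus n_out biases (each like w2).
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Our linear model y = w2 + w1*x is the smallest case: 1 input, 1 output.
print(count_parameters([1, 1]))  # 2

# A hypothetical small network: 1,000 inputs -> 4,000 hidden -> 1,000 outputs.
print(count_parameters([1000, 4000, 1000]))  # 8005000
```

Even this toy network has about 8 million parameters; stacking many wider layers is how models climb into the billions.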

Bringing it All Together

Looking at how LLMs are built explains why they are so good at predicting the next word in a sentence, which is the foundation of tools like ChatGPT. While writing an answer, ChatGPT is essentially writing (or rather, predicting) one word at a time based on the words it has generated so far. The way ChatGPT’s interface reveals an answer word by word actually mirrors this ‘one word at a time’ process.
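
The ‘one word at a time’ loop can be sketched with a toy stand-in for the model. Here a hand-written table of next words replaces a real LLM, which would look at all the previous words and score every possible next token using its billions of parameters. What the sketch does show is the loop itself: predict a word, append it, and feed the longer text back in.

```python
# Hypothetical toy "model": maps the last word to the most likely next word.
NEXT_WORD = {
    "large": "language",
    "language": "models",
    "models": "predict",
    "predict": "words",
}


def predict_next(words):
    """Stand-in for an LLM: looks only at the last word (a simplification)."""
    return NEXT_WORD.get(words[-1])


def generate(prompt, max_new_words=10):
    words = prompt.split()
    for _ in range(max_new_words):
        nxt = predict_next(words)  # predict one word from everything so far
        if nxt is None:            # the toy model has nothing more to add
            break
        words.append(nxt)          # append it and feed the result back in
    return " ".join(words)


print(generate("large"))  # large language models predict words
```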

So what’s the practical application of what we just covered? For one thing, you can take an LLM like GPT as a base model and fine-tune it by training it further on a small amount of product-specific data. That way, you can create a model specific to your product.
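
Fine-tuning a real LLM is far more involved, but the core idea fits in our small example. This is a toy analogy, not how any LLM fine-tuning service works: we start from the “pretrained” model y = 11x and nudge w1 and w2 with gradient descent (a standard training technique not covered above) using only the tougher test’s single data point (5 hours, 20 marks). The learning rate and number of steps are arbitrary choices for illustration.

```python
def fine_tune(w1, w2, data, learning_rate=0.01, steps=100):
    """Adjust existing parameters with gradient descent on squared error."""
    for _ in range(steps):
        grad_w1 = grad_w2 = 0.0
        for x, y in data:
            error = (w2 + w1 * x) - y  # how far off the current prediction is
            grad_w1 += 2 * error * x   # derivative of error**2 w.r.t. w1
            grad_w2 += 2 * error       # derivative of error**2 w.r.t. w2
        w1 -= learning_rate * grad_w1  # step against the gradient
        w2 -= learning_rate * grad_w2
    return w1, w2


base_w1, base_w2 = 11.0, 0.0  # "pretrained" model y = 11x from the first test
small_data = [(5, 20)]        # product-specific data: the tougher test

w1, w2 = fine_tune(base_w1, base_w2, small_data)
print(round(w2 + w1 * 5, 2))  # 20.0
```

Starting from w1 = 11 instead of from scratch is the analogue of starting from a pretrained base model: training begins from parameters learned elsewhere and only adapts them to the new data.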

Look at how products out there have benefited from this, and you might get ideas for your own product.

Salesforce Announces Einstein GPT, the World’s First Generative AI for CRM

Snap rolled out My AI, a new chatbot running the latest version of OpenAI's GPT technology that they have customized for Snapchat

Instacart Joins ChatGPT Frenzy, Adding Chatbot To Grocery Shopping App

Quizlet: Introducing Q-Chat, the world’s first AI tutor built with OpenAI’s ChatGPT

In future posts, we will talk about deep learning, transformers, self-attention, etc., which will deepen your knowledge of LLMs. But for now, that’s it for this week.

Hope you find a cool and useful application of LLMs in your product 🚀

Another update: I will be hosting a free Zoom event on ‘Building a Product Sense’ on 25th April. 500+ folks have already registered. You can register for the event on LinkedIn.

Have a good day!

Regards,

Deepak
